Stereo Depth from Events Cameras: Concentrate and Focus on the Future

– Published Date : TBD

– Category : Event Stereo

– Place of publication : IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2022

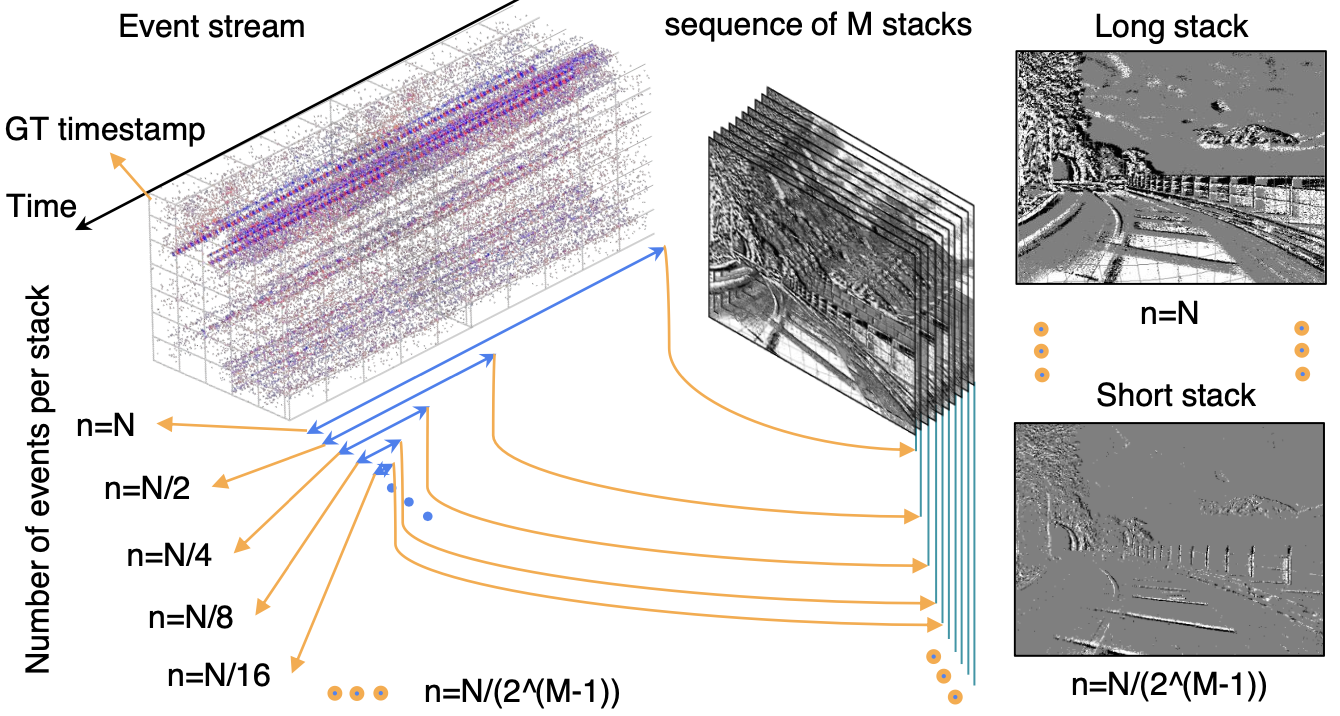

Neuromorphic cameras, or event cameras, mimic human vision by reporting changes in intensity in a scene instead of capturing the whole scene at once as a single frame, as conventional cameras do. Events are streamed data that are often dense when the scene changes or the camera moves rapidly. Such rapid motion can cause events to be overwritten or lost when they are accumulated into a tensor for learning. Here, we propose to learn to concentrate on dense events to produce a sharp, compact event representation with fine detail for depth estimation. Specifically, we train a model with events from both the past and the future, but at inference time we use only past events together with the predicted future. We first estimate depth in an event-only setting, and then further combine images and events through a hierarchical event and intensity combination network to predict higher-quality depth. Experiments in challenging real-world scenarios validate the superiority of our method over prior art.
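
The overwriting problem mentioned above can be illustrated with a small sketch. This is not the authors' concentration network; it is a generic example that assumes events arrive as hypothetical `(x, y, t, p)` records (pixel coordinates, timestamp, polarity). It compares a single-channel map, where each pixel keeps only its most recent event, with a common alternative that splits the time window into temporal bins. The names `Event`, `last_polarity_map` and `stack_into_bins` are illustrative only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Event:
    x: int    # pixel column
    y: int    # pixel row
    t: float  # timestamp (hypothetical units, e.g. seconds)
    p: int    # polarity: +1 (brighter) or -1 (darker)


def last_polarity_map(events: List[Event], width: int, height: int) -> List[List[int]]:
    """Single-channel frame: each pixel stores only the polarity of its latest event."""
    grid = [[0] * width for _ in range(height)]
    for e in sorted(events, key=lambda ev: ev.t):
        grid[e.y][e.x] = e.p  # a later event at the same pixel overwrites the earlier one
    return grid


def stack_into_bins(events: List[Event], width: int, height: int,
                    num_bins: int, t_start: float, t_end: float) -> List[List[List[int]]]:
    """Multi-channel stack: polarities are summed into num_bins equal time slices."""
    if t_end <= t_start:
        raise ValueError("t_end must be greater than t_start")
    span = t_end - t_start
    stack = [[[0] * width for _ in range(height)] for _ in range(num_bins)]
    for e in events:
        # Map the timestamp to a bin index; events at t_end fall into the last bin.
        b = min(int((e.t - t_start) / span * num_bins), num_bins - 1)
        stack[b][e.y][e.x] += e.p
    return stack


# A fast edge passes pixel (1, 0) twice within one window, while pixel (2, 0) fires once.
events = [Event(1, 0, 0.1, +1), Event(1, 0, 0.9, -1), Event(2, 0, 0.5, +1)]
single = last_polarity_map(events, width=3, height=1)
stack = stack_into_bins(events, width=3, height=1, num_bins=2, t_start=0.0, t_end=1.0)
print(single)  # the +1 event at pixel (1, 0) has been overwritten
print(stack)   # both events at pixel (1, 0) survive, in different bins
```

Temporal binning reduces this loss but still fixes the bin count in advance; during very dense bursts several events can still share one bin, which is the kind of situation a learned representation aims to handle better.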

Acknowledgement : This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2022R1A2B5B03002636).