Learning Adaptive Dense Event Stereo from the Image Domain

– Published Date : TBD

– Category : Event Camera

– Place of publication : IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2023

Abstract:

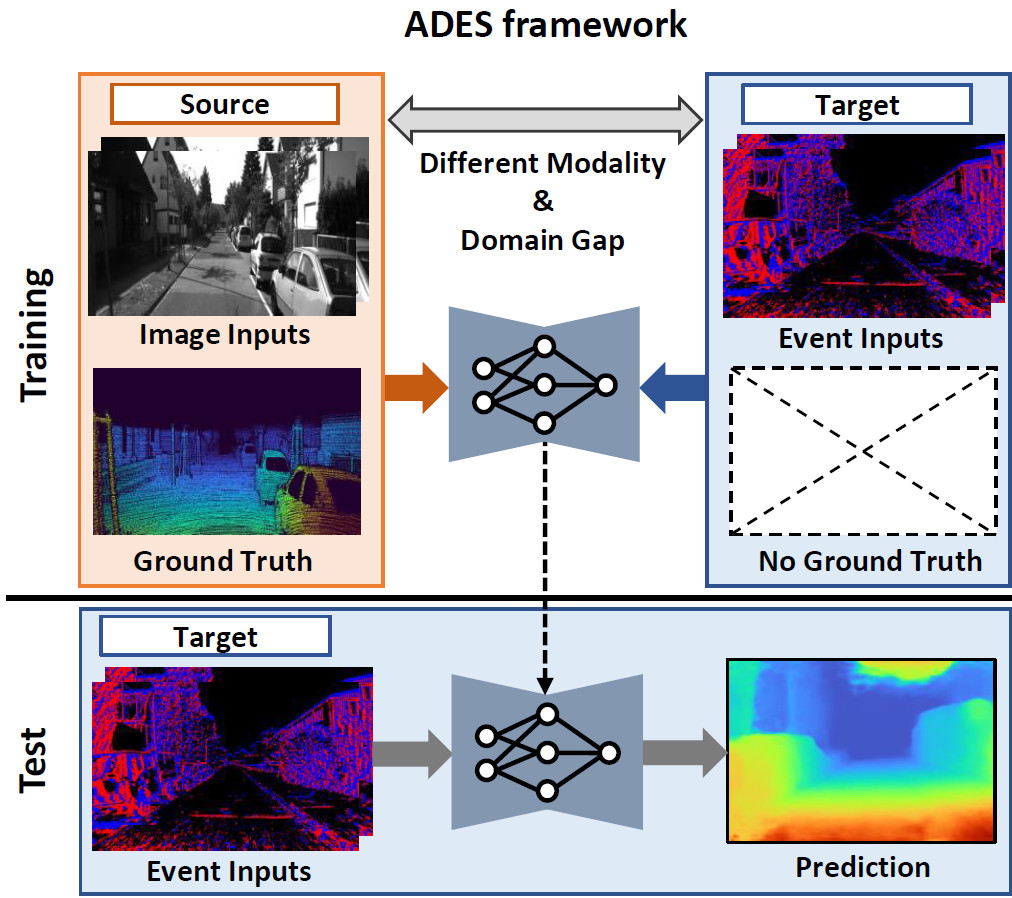

Recently, event-based stereo matching has been studied for its robustness in poor lighting conditions. However, existing event-based stereo networks suffer severe performance degradation due to the domain gap. Unsupervised domain adaptation (UDA) aims to resolve this problem without using target-domain ground truth. However, UDA still needs input event data and ground truth in the source domain, which are more expensive to obtain than image data. To tackle this issue, we propose a novel unsupervised domain Adaptive Dense Event Stereo (ADES), which resolves gaps between the different domains and input modalities. The proposed ADES framework adapts the event-based stereo network from abundant image datasets with ground truth in the source domain to event datasets without ground truth in the target domain, which is a more practical but challenging setup. First, we propose a smudge-aware self-supervision module, which self-supervises the network on the event target domain through the image reconstruction process. To remove intermittent artifacts in the reconstructed image, self-supervision in the target domain is assisted by a smudge prediction network trained on the source domain. Second, we utilize a feature-level normalization scheme to align the extracted features along the epipolar line. Finally, we present a motion-invariant consistency module to enforce consistent outputs under perturbed motion. Our experiments demonstrate that our approach achieves remarkable results in adapting event-based stereo matching from the image domain.
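
The abstract names the second and third components without giving their formulas, so the following is only a minimal sketch of one possible reading, not the authors' implementation. It assumes rectified stereo, where each image row is an epipolar line, and standardizes a feature map along the width axis. It also assumes the consistency term can be written as a mean absolute difference between two disparity maps predicted from differently perturbed inputs. The function names `normalize_along_epipolar` and `consistency_loss`, the `(C, H, W)` layout, and the choice of an L1 penalty are all hypothetical.

```python
import numpy as np


def normalize_along_epipolar(features, eps=1e-5):
    """Standardize a (C, H, W) feature map along the width axis.

    Assumption: the stereo pair is rectified, so every row is an
    epipolar line. This is an illustrative guess at "feature-level
    normalization", not the scheme defined in the paper.
    """
    mean = features.mean(axis=2, keepdims=True)  # per-channel, per-row mean
    std = features.std(axis=2, keepdims=True)    # per-channel, per-row std
    return (features - mean) / (std + eps)


def consistency_loss(disp_a, disp_b):
    """Mean absolute difference between two (H, W) disparity maps.

    Assumption: the two maps come from the same scene under different
    motion perturbations; the L1 form is a hypothetical choice.
    """
    return float(np.mean(np.abs(disp_a - disp_b)))


# Toy data standing in for network features and predictions.
rng = np.random.default_rng(0)
feat = rng.normal(loc=3.0, scale=2.0, size=(4, 6, 8))
norm = normalize_along_epipolar(feat)

disp_a = rng.uniform(0.0, 64.0, size=(6, 8))
disp_b = disp_a + 0.5  # pretend the perturbed input shifts disparity by 0.5 px
loss = consistency_loss(disp_a, disp_b)
```

After normalization, each row of each channel has approximately zero mean and unit variance, so left and right features are compared on the same scale along the search direction. In a training setup the consistency term would be added to the self-supervised loss with some weight, which the abstract does not specify.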