Joint Learning of 2D-3D Weakly Supervised Semantic Segmentation

– Authors: Hyeokjun Kweon and Kuk-Jin Yoon

– Published Date: TBD

– Category: Weakly Supervised Semantic Segmentation

– Place of publication: The Conference on Neural Information Processing Systems (NeurIPS) 2022

Abstract

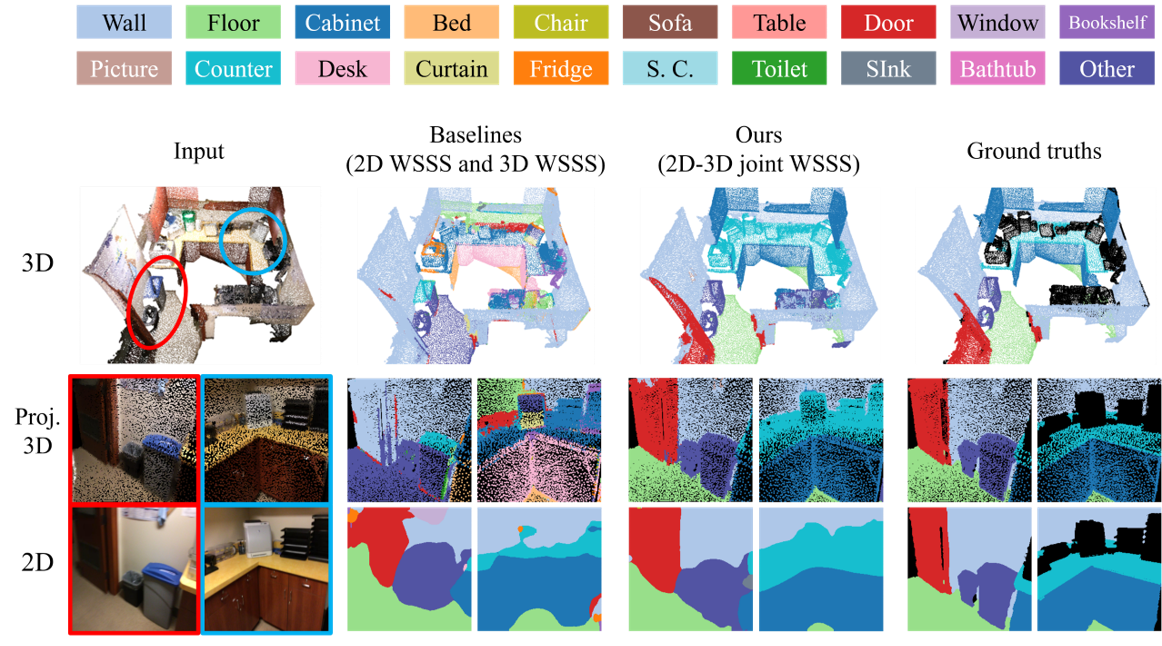

The aim of weakly supervised semantic segmentation (WSSS) is to learn semantic segmentation without using dense annotations. WSSS has been intensively studied for 2D images and 3D point clouds. However, the existing WSSS studies have focused on a single domain, i.e., 2D or 3D, even when multi-domain data is available. In this paper, we propose a novel joint 2D-3D WSSS framework that takes advantage of WSSS in both domains, using classification labels only. Via projection, we leverage the 2D class activation map as self-supervision to enhance the 3D semantic perception. Conversely, we exploit the similarity matrix of point cloud features for training the image classifier to achieve more precise 2D segmentation. In both directions, we devise a confidence-based scoring method to reduce the effect of inaccurate self-supervision. With extensive quantitative and qualitative experiments, we verify that the proposed joint WSSS framework effectively transfers the benefit of each domain to the other domain, and the resulting semantic segmentation performance is remarkably improved in both 2D and 3D domains. On the ScanNetV2 benchmark, our framework significantly outperforms the prior WSSS approaches, suggesting a new research direction for WSSS.
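To make the 2D-to-3D direction more concrete, here is a minimal NumPy sketch of how a class activation map could be transferred to a point cloud by projection. It is not the authors' implementation. The function name `cam_pseudo_labels`, the pinhole intrinsics matrix `K`, and the fixed `threshold` are all assumptions made for illustration. In particular, the simple threshold is only a stand-in for the confidence-based scoring mentioned in the abstract, whose actual form is not described here.

```python
import numpy as np

def cam_pseudo_labels(points_cam, K, cam, threshold=0.5):
    """Assign CAM-based pseudo-labels to 3D points (illustrative sketch).

    points_cam: (N, 3) points in camera coordinates.
    K:          (3, 3) pinhole intrinsics with last row [0, 0, 1] (assumed).
    cam:        (C, H, W) class activation map with scores in [0, 1].
    threshold:  hypothetical confidence cutoff, not taken from the paper.
    Returns an (N,) integer array; -1 marks points without a label.
    """
    num_classes, height, width = cam.shape
    labels = np.full(points_cam.shape[0], -1, dtype=np.int64)

    # Keep only points in front of the camera.
    front = np.flatnonzero(points_cam[:, 2] > 0)
    uvw = points_cam[front] @ K.T

    # Perspective division, then nearest-pixel lookup.
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(np.int64)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(np.int64)

    # Discard points that project outside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    front, u, v = front[inside], u[inside], v[inside]

    # Sample the per-class scores at each projected pixel: shape (C, M).
    scores = cam[:, v, u]
    best = scores.argmax(axis=0)
    conf = scores.max(axis=0)

    # Only confident pixels supervise their 3D points.
    keep = conf >= threshold
    labels[front[keep]] = best[keep]
    return labels
```

Points that are behind the camera, fall outside the image, or land on low-scoring pixels keep the label `-1`, so a 3D loss could ignore them. A real pipeline would also need occlusion handling (for example, a depth check), which this sketch omits.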