Video-kMaX: A Simple Unified Approach for Online and Near-Online Video Panoptic Segmentation

– Published Date: TBD

– Category: Video Panoptic Segmentation

– Place of publication: IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop (CVPRW) on Transformers for Vision 2023

Abstract:

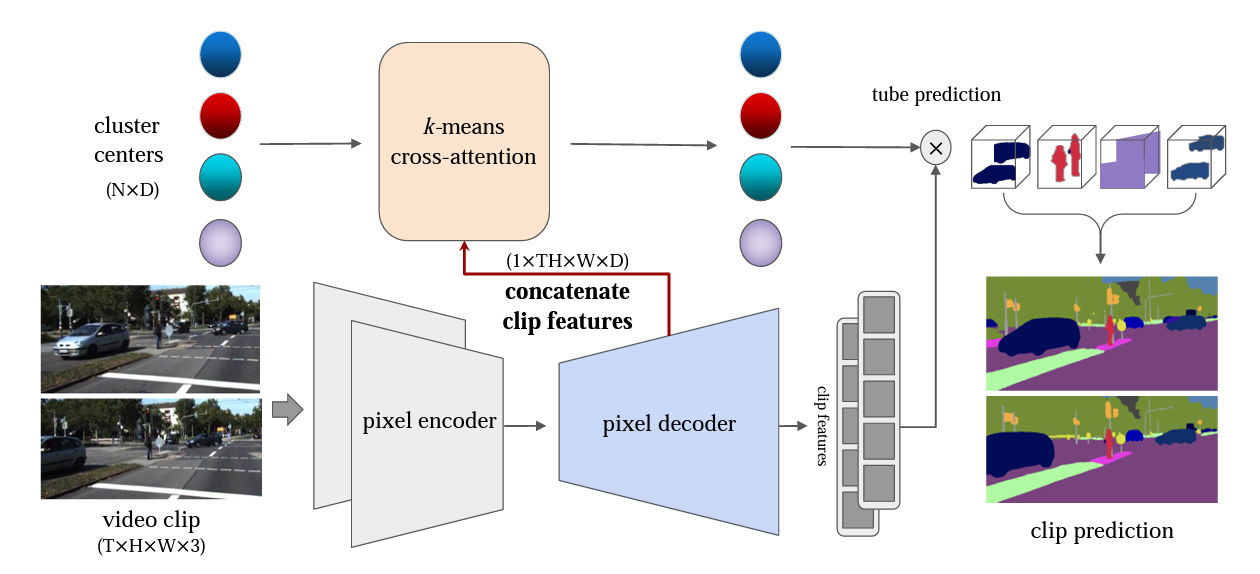

Video Panoptic Segmentation (VPS) aims to achieve comprehensive pixel-level scene understanding by segmenting all pixels and associating objects across the frames of a video. Current solutions fall into online and near-online approaches, and each category has its own specialized designs. In this work, we propose a unified approach for online and near-online VPS. The meta architecture of the proposed Video-kMaX consists of two components: a within-clip segmenter (for clip-level segmentation) and a cross-clip associater (for association beyond clips). We propose clip-kMaX (clip k-means mask transformer) and LA-MB (location-aware memory buffer) to instantiate the segmenter and the associater, respectively. Specifically, motivated by the modern k-means mask transformer, our clip-kMaX regards the object queries as cluster centers for a clip, where each query is responsible for grouping together the pixels of the same object within that clip. To achieve long-term association beyond the short clip length, our LA-MB stores the appearance and location features of tracked objects in a memory buffer. The association is then obtained efficiently in a hierarchical manner: video stitching first provides short-term association, and memory decoding then provides long-term association. Our general formulation includes the online scenario as a special case by adopting a clip length of one. Without bells and whistles, Video-kMaX sets a new state-of-the-art on KITTI-STEP and VIPSeg for video panoptic segmentation.
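The idea of treating object queries as cluster centers for a whole clip can be illustrated with a toy, hedged sketch. It assumes hard (argmax) assignment of each pixel to its most similar query, and it swaps the learned k-means cross-attention update for a plain k-means mean update over the assigned pixel features. The function `clip_kmeans_step` and its arguments are hypothetical names for illustration, not part of any released Video-kMaX code. Pixels from all T frames of the clip are pooled, so one query can claim the same object in every frame; passing a single frame (T = 1) gives the online special case.

```python
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Affinity between a query (cluster center) and a pixel feature."""
    return sum(x * y for x, y in zip(a, b))


def clip_kmeans_step(
    centers: List[Vector], clip_pixels: List[List[Vector]]
) -> Tuple[List[List[int]], List[Vector]]:
    """One hard-assignment k-means step over every pixel of a clip.

    Hypothetical simplification: the real model uses learned projections
    and a residual cross-attention update; here centers move to the mean.
    centers: N queries, each a D-dim feature.
    clip_pixels: T frames, each a list of D-dim pixel features.
    Returns (per-frame query assignments, updated centers).
    """
    # Pool pixels from all T frames: the same N queries serve the whole clip,
    # so an object's pixels in different frames can join one cluster.
    flat = [p for frame in clip_pixels for p in frame]

    # Assignment step: argmax over queries, one cluster per pixel.
    labels = [
        max(range(len(centers)), key=lambda k: dot(centers[k], p)) for p in flat
    ]

    # Update step: each center becomes the mean of its assigned pixels;
    # a query that received no pixels keeps its previous value.
    new_centers: List[Vector] = []
    for k, center in enumerate(centers):
        members = [p for p, lab in zip(flat, labels) if lab == k]
        if members:
            new_centers.append([sum(col) / len(members) for col in zip(*members)])
        else:
            new_centers.append(list(center))

    # Split the flat labels back into one list per frame.
    per_frame: List[List[int]] = []
    start = 0
    for frame in clip_pixels:
        per_frame.append(labels[start:start + len(frame)])
        start += len(frame)
    return per_frame, new_centers


if __name__ == "__main__":
    queries = [[1.0, 0.0], [0.0, 1.0]]
    clip = [
        [[0.75, 0.25], [0.25, 0.5]],  # frame 0: two pixels
        [[1.0, 0.5], [0.0, 1.0]],     # frame 1: two pixels
    ]
    labels, centers = clip_kmeans_step(queries, clip)
    print(labels)   # [[0, 1], [0, 1]]
    print(centers)  # [[0.875, 0.375], [0.125, 0.75]]
    # Online special case: a clip of one frame.
    print(clip_kmeans_step(queries, clip[:1]))
```

In the usage example, query 0 collects the first pixel of both frames and query 1 the second, so each query tracks "its" object across the clip without a separate matching step; association across clips is what the memory buffer is meant to handle.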